Source: www1.se.cuhk.edu.hk

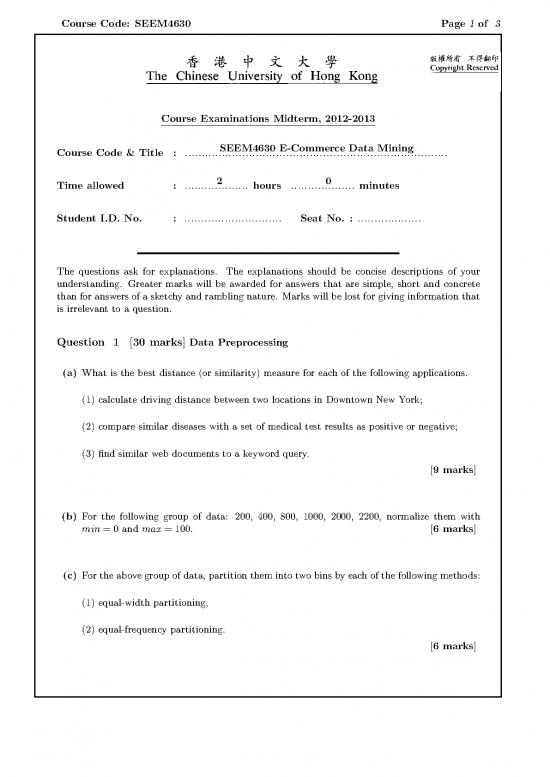

Course Code: SEEM4630 Page 1 of 3

Course Examinations Midterm, 2012-2013

Course Code & Title: SEEM4630 E-Commerce Data Mining

Time allowed: 2 hours 0 minutes

Student I.D. No.: ____________________ Seat No.: ____________

The questions ask for explanations. The explanations should be concise descriptions of your understanding. Greater marks will be awarded for answers that are simple, short and concrete than for answers of a sketchy and rambling nature. Marks will be lost for giving information that is irrelevant to a question.

Question 1 [30 marks] Data Preprocessing

(a) What is the best distance (or similarity) measure for each of the following applications?

(1) calculating the driving distance between two locations in downtown New York;

(2) comparing diseases for similarity, where each disease is described by a set of medical test results, each either positive or negative;

(3) finding web documents similar to a keyword query.

[9 marks]
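For application (1), one common line of reasoning is that on an idealized rectangular street grid a car can only travel along the streets, so the Manhattan (L1) distance tracks driving distance more closely than the straight-line Euclidean distance. The minimal sketch below contrasts the two; the function names and the block coordinates are hypothetical, and a real route would also depend on one-way streets and traffic.

```python
import math


def manhattan(p, q):
    """L1 distance: sum of absolute coordinate differences (axis-aligned travel)."""
    return sum(abs(a - b) for a, b in zip(p, q))


def euclidean(p, q):
    """L2 distance: straight-line distance, ignoring the street layout."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


# Hypothetical intersections, in blocks (avenue, street), on an idealized grid.
start = (2, 10)
end = (5, 14)
print(manhattan(start, end))  # 7 blocks: 3 across + 4 up
print(euclidean(start, end))  # 5.0 blocks "as the crow flies"
```

The Euclidean value underestimates the distance a car actually has to drive whenever both coordinates differ.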

(b) Normalize the following group of data so that the new minimum is 0 and the new maximum is 100: 200, 400, 800, 1000, 2000, 2200. [6 marks]
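Reading part (b) as min-max normalization (an assumption, since the question only gives the target range), each value v is mapped to (v - min) / (max - min) × (new_max - new_min) + new_min, here with min = 200, max = 2200, new_min = 0 and new_max = 100. A minimal sketch for checking the arithmetic; the function name is hypothetical:

```python
def min_max_normalize(values, new_min=0.0, new_max=100.0):
    """Linearly map values from [min(values), max(values)] onto [new_min, new_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("all values are equal; the range is undefined")
    # Multiply before dividing so that integer-valued results stay exact in floating point.
    return [new_min + (v - lo) * (new_max - new_min) / (hi - lo) for v in values]


data = [200, 400, 800, 1000, 2000, 2200]
print(min_max_normalize(data))  # [0.0, 10.0, 30.0, 40.0, 90.0, 100.0]
```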

(c) Partition the same group of data into two bins using each of the following methods:

(1) equal-width partitioning,

(2) equal-frequency partitioning.

[6 marks]
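With two bins, equal-width partitioning uses a width of (2200 - 200) / 2 = 1000, giving the intervals [200, 1200) and [1200, 2200], while equal-frequency partitioning puts three of the six sorted values in each bin. The sketch below assumes the maximum value belongs to the last interval (no value in this data falls exactly on the 1200 edge, so the boundary convention does not matter here); the function names are hypothetical.

```python
def equal_width_bins(values, k):
    """Split the range [min, max] into k intervals of equal width."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / k
    bins = [[] for _ in range(k)]
    for v in sorted(values):
        # min(..., k - 1) puts the maximum value into the last bin instead of bin k.
        idx = min(int((v - lo) // width), k - 1)
        bins[idx].append(v)
    return bins


def equal_frequency_bins(values, k):
    """Split the sorted values into k bins holding (about) the same number of items."""
    ordered = sorted(values)
    n = len(ordered)
    return [ordered[i * n // k:(i + 1) * n // k] for i in range(k)]


data = [200, 400, 800, 1000, 2000, 2200]
print(equal_width_bins(data, 2))      # [[200, 400, 800, 1000], [2000, 2200]]
print(equal_frequency_bins(data, 2))  # [[200, 400, 800], [1000, 2000, 2200]]
```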


(d) For the two binary vectors p = [1,1,0,0,0,0,1,0,0,0] and q = [0,1,0,0,0,0,1,0,1,0], compute the following similarities:

(1) Simple Matching Similarity,

(2) Jaccard Similarity,

(3) Cosine Similarity.

[9 marks]
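The three measures in part (d) can be checked by counting the attribute matches f11 (both 1), f10, f01 and f00 (both 0): Simple Matching is (f11 + f00) divided by the number of attributes, Jaccard is f11 / (f11 + f10 + f01) and ignores 0-0 matches, and cosine is the dot product divided by the product of the vector lengths. A minimal sketch with hypothetical helper names:

```python
import math


def binary_counts(p, q):
    """Return (f11, f10, f01, f00): counts of each (p_i, q_i) combination."""
    f11 = sum(1 for a, b in zip(p, q) if a == 1 and b == 1)
    f10 = sum(1 for a, b in zip(p, q) if a == 1 and b == 0)
    f01 = sum(1 for a, b in zip(p, q) if a == 0 and b == 1)
    f00 = sum(1 for a, b in zip(p, q) if a == 0 and b == 0)
    return f11, f10, f01, f00


def smc(p, q):
    f11, f10, f01, f00 = binary_counts(p, q)
    return (f11 + f00) / (f11 + f10 + f01 + f00)


def jaccard(p, q):
    f11, f10, f01, _ = binary_counts(p, q)  # 0-0 matches are ignored
    return f11 / (f11 + f10 + f01)


def cosine(p, q):
    dot = sum(a * b for a, b in zip(p, q))
    norm_p = math.sqrt(sum(a * a for a in p))
    norm_q = math.sqrt(sum(b * b for b in q))
    return dot / (norm_p * norm_q)


p = [1, 1, 0, 0, 0, 0, 1, 0, 0, 0]
q = [0, 1, 0, 0, 0, 0, 1, 0, 1, 0]
print(binary_counts(p, q))  # (2, 1, 1, 6)
print(smc(p, q))            # 0.8
print(jaccard(p, q))        # 0.5
print(cosine(p, q))         # approximately 0.6667 (= 2/3)
```

The gap between 0.8 and 0.5 comes entirely from the six 0-0 matches, which Simple Matching counts and Jaccard does not.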

Question 2 [30 marks] Decision Tree Induction

Consider the training dataset shown in Table 1.

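| A | B | Class Label |
|---|---|---|
| 0 | 1 | c1 |
| 0 | 0 | c2 |
| 1 | 1 | c1 |
| 0 | 1 | c1 |
| 1 | 0 | c1 |
| 0 | 0 | c2 |
| 1 | 1 | c1 |
| 0 | 0 | c2 |
| 1 | 0 | c1 |
| 1 | 0 | c2 |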

Table 1: A Training Dataset for Questions 2 and 3

(a) Calculate the gain in the Gini index when splitting on attribute A and on attribute B. Show your calculation details. Based on the gain, which attribute would you choose as the first split in the decision tree induction? [15 marks]
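The Gini gain of a split is the parent's Gini index, 1 - Σ_j p(j)², minus the size-weighted Gini index of the child nodes. A minimal sketch for checking the calculation on Table 1; the tuple encoding of the table and the helper names are illustrative assumptions:

```python
from collections import Counter

# Table 1 encoded as (A, B, class label) tuples.
DATA = [
    (0, 1, "c1"), (0, 0, "c2"), (1, 1, "c1"), (0, 1, "c1"), (1, 0, "c1"),
    (0, 0, "c2"), (1, 1, "c1"), (0, 0, "c2"), (1, 0, "c1"), (1, 0, "c2"),
]


def gini(labels):
    """Gini index: 1 - sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def gini_gain(rows, attr_index):
    """Parent Gini minus the size-weighted Gini of the children of a split."""
    labels = [r[-1] for r in rows]
    weighted = 0.0
    for value in sorted({r[attr_index] for r in rows}):
        child = [r[-1] for r in rows if r[attr_index] == value]
        weighted += len(child) / len(rows) * gini(child)
    return gini(labels) - weighted


print(round(gini_gain(DATA, 0), 4))  # split on A: 0.08
print(round(gini_gain(DATA, 1), 4))  # split on B: 0.2133
```

Under these definitions the parent node (6 × c1, 4 × c2) has Gini 0.48, and the split on B yields a pure B = 1 child, which is why its gain is larger.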

(b) Calculate the gain in the misclassification error when splitting on attribute A and on attribute B. Show your calculation details. Based on the gain, which attribute would you choose as the first split in the decision tree induction? [15 marks]
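The misclassification error of a node is 1 - max_j p(j), the fraction of records outside the majority class; the gain is again the parent value minus the size-weighted child values. A minimal sketch on Table 1, with an illustrative tuple encoding and hypothetical helper names:

```python
from collections import Counter

# Table 1 encoded as (A, B, class label) tuples.
DATA = [
    (0, 1, "c1"), (0, 0, "c2"), (1, 1, "c1"), (0, 1, "c1"), (1, 0, "c1"),
    (0, 0, "c2"), (1, 1, "c1"), (0, 0, "c2"), (1, 0, "c1"), (1, 0, "c2"),
]


def misclassification_error(labels):
    """Error = 1 - proportion of the majority class."""
    return 1.0 - max(Counter(labels).values()) / len(labels)


def error_gain(rows, attr_index):
    """Parent error minus the size-weighted error of the children of a split."""
    labels = [r[-1] for r in rows]
    weighted = 0.0
    for value in sorted({r[attr_index] for r in rows}):
        child = [r[-1] for r in rows if r[attr_index] == value]
        weighted += len(child) / len(rows) * misclassification_error(child)
    return misclassification_error(labels) - weighted


print(round(error_gain(DATA, 0), 4))  # split on A: 0.1
print(round(error_gain(DATA, 1), 4))  # split on B: 0.2
```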


Question 3 [20 marks] Naive Bayes Classification

Consider the training dataset shown in Table 1, and answer the following questions.

(a) Compute the conditional probabilities $P(A = 1 \mid C = c1)$, $P(A = 0 \mid C = c1)$, $P(B = 1 \mid C = c1)$, $P(B = 0 \mid C = c1)$, $P(A = 1 \mid C = c2)$, $P(A = 0 \mid C = c2)$, $P(B = 1 \mid C = c2)$, and $P(B = 0 \mid C = c2)$. [12 marks]
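Each conditional probability is a relative frequency: the number of records with that attribute value and class, divided by the number of records in the class. The sketch below keeps the results exact with `fractions.Fraction` and applies no smoothing (such as a Laplace correction), so a zero count stays zero; the tuple encoding and the helper name are illustrative assumptions.

```python
from collections import Counter
from fractions import Fraction

# Table 1 encoded as (A, B, class label) tuples.
DATA = [
    (0, 1, "c1"), (0, 0, "c2"), (1, 1, "c1"), (0, 1, "c1"), (1, 0, "c1"),
    (0, 0, "c2"), (1, 1, "c1"), (0, 0, "c2"), (1, 0, "c1"), (1, 0, "c2"),
]


def conditional_probs(rows, attr_index):
    """Return {(value, label): P(attr = value | C = label)} by relative frequency."""
    class_counts = Counter(r[-1] for r in rows)
    joint = Counter((r[attr_index], r[-1]) for r in rows)  # missing pairs count as 0
    values = sorted({r[attr_index] for r in rows})
    return {
        (v, c): Fraction(joint[(v, c)], class_counts[c])
        for v in values for c in sorted(class_counts)
    }


p_a = conditional_probs(DATA, 0)
p_b = conditional_probs(DATA, 1)
print(p_a[(1, "c1")], p_a[(0, "c1")], p_b[(1, "c1")], p_b[(0, "c1")])  # 2/3 1/3 2/3 1/3
print(p_a[(1, "c2")], p_a[(0, "c2")], p_b[(1, "c2")], p_b[(0, "c2")])  # 1/4 3/4 0 1
```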

(b) Use the computed conditional probabilities to predict the class label for the test sample $(A = 1, B = 0)$ using the naive Bayes approach. [8 marks]
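Naive Bayes scores each class by its prior times the product of the per-attribute conditional probabilities, P(C) × P(A = 1 | C) × P(B = 0 | C); the common denominator P(A = 1, B = 0) is the same for both classes and can be dropped when comparing them. A minimal sketch with unsmoothed frequency estimates; the helper name and tuple encoding are illustrative assumptions:

```python
from collections import Counter
from fractions import Fraction

# Table 1 encoded as (A, B, class label) tuples.
DATA = [
    (0, 1, "c1"), (0, 0, "c2"), (1, 1, "c1"), (0, 1, "c1"), (1, 0, "c1"),
    (0, 0, "c2"), (1, 1, "c1"), (0, 0, "c2"), (1, 0, "c1"), (1, 0, "c2"),
]


def naive_bayes_scores(rows, sample):
    """Score each class by P(C) * prod_i P(x_i | C), using unsmoothed frequencies."""
    class_counts = Counter(r[-1] for r in rows)
    scores = {}
    for c, n_c in class_counts.items():
        score = Fraction(n_c, len(rows))  # prior P(C = c)
        for i, x in enumerate(sample):
            matches = sum(1 for r in rows if r[-1] == c and r[i] == x)
            score *= Fraction(matches, n_c)  # likelihood P(X_i = x | C = c)
        scores[c] = score
    return scores


scores = naive_bayes_scores(DATA, (1, 0))  # test sample A = 1, B = 0
print(scores)                              # {'c1': Fraction(2, 15), 'c2': Fraction(1, 10)}
print(max(scores, key=scores.get))         # c1
```

Since 2/15 ≈ 0.133 exceeds 1/10, the sample is assigned to c1 under these estimates.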

Question 4 [20 marks] Classification Accuracy and Cost

Table 2 shows a confusion matrix and a cost matrix for a two-class problem. Calculate the following measures:

| (a) Confusion Matrix | Predicted + | Predicted - |
|---|---|---|
| True + | 100 | 40 |
| True - | 60 | 300 |

| (b) Cost Matrix | Predicted + | Predicted - |
|---|---|---|
| True + | -1 | 100 |
| True - | 20 | 0 |

Table 2: Confusion and Cost Matrices for Question 4

(a) Accuracy, [4 marks]

(b) Misclassification cost, [4 marks]

(c) Precision, [4 marks]

(d) Recall, [4 marks]

(e) F-measure. [4 marks]
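The five measures follow directly from the four confusion-matrix counts. The sketch below reads the cost matrix as C(true class, predicted class), matching the row and column layout of Table 2(b), and takes the F-measure to be F1, the harmonic mean of precision and recall; both readings are assumptions about the intended definitions.

```python
# Confusion-matrix counts from Table 2(a): rows = true class, columns = predicted class.
TP, FN = 100, 40
FP, TN = 60, 300

# Cost-matrix entries from Table 2(b), keyed as (true class, predicted class).
COST = {("+", "+"): -1, ("+", "-"): 100, ("-", "+"): 20, ("-", "-"): 0}

accuracy = (TP + TN) / (TP + FN + FP + TN)
# Total cost: each cell count multiplied by its cost, then summed.
cost = (TP * COST[("+", "+")] + FN * COST[("+", "-")]
        + FP * COST[("-", "+")] + TN * COST[("-", "-")])
precision = TP / (TP + FP)
recall = TP / (TP + FN)
f_measure = 2 * precision * recall / (precision + recall)

print(accuracy)             # 0.8
print(cost)                 # 5100
print(precision)            # 0.625
print(round(recall, 4))     # 0.7143
print(round(f_measure, 4))  # 0.6667
```

Equivalently, F1 = 2·TP / (2·TP + FP + FN) = 200 / 300, which gives the same value without computing precision and recall first.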

-End-
